IoT Devices and The World’s Population

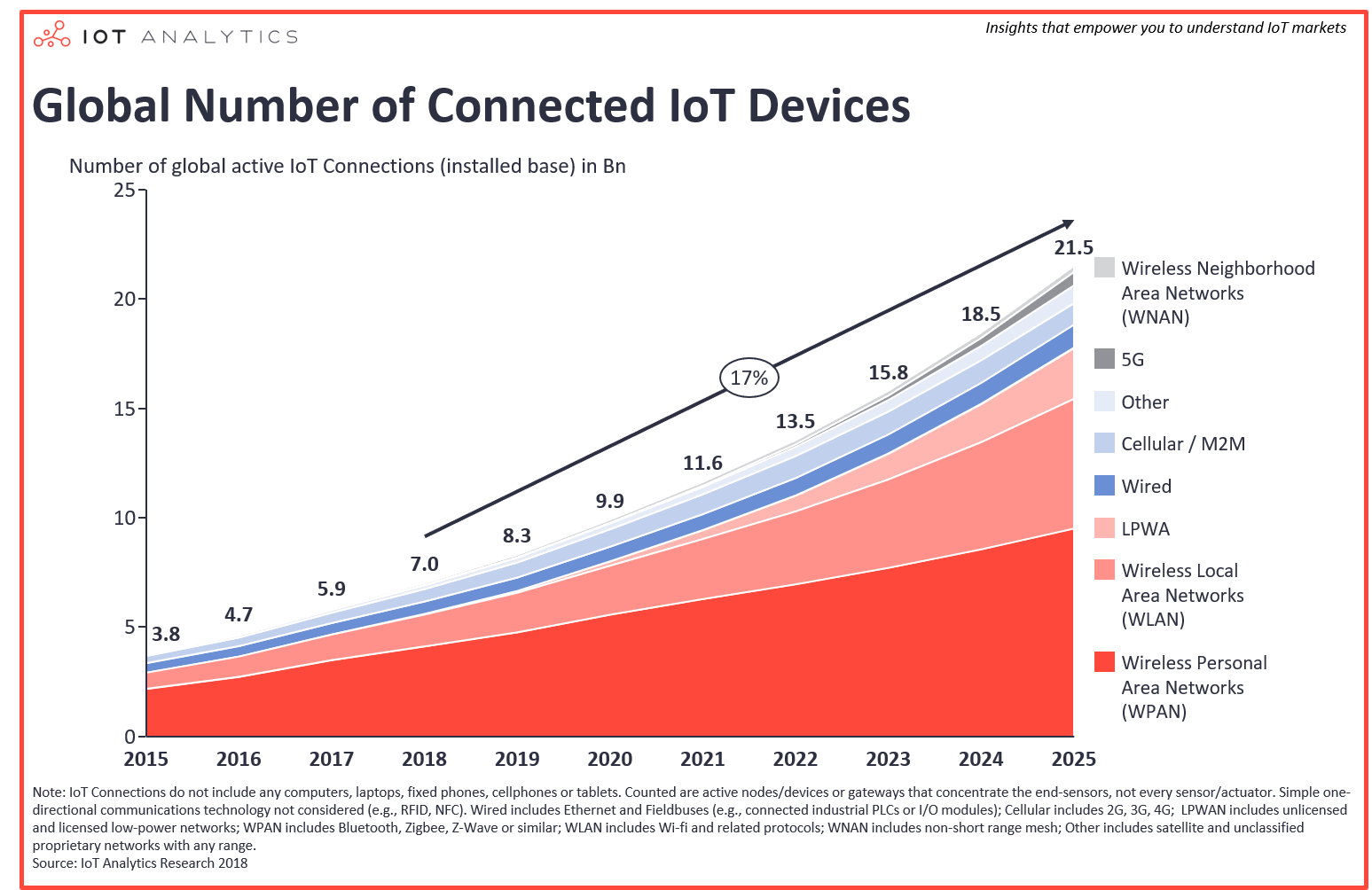

We live in a time when a machine that merely performs a basic task, for instance brewing coffee or heating water, is not enough. We want a smart solution that is user-friendly, intuitive and equipped with modern communication technologies such as Bluetooth, WiFi and NFC. We are surrounded by smart devices at home, in the office, on the train and in the city. These smart connected devices are a small part of the Internet of Things. We have seen exponential growth in connected devices in recent years, and the number is set to climb steeply in the coming years. To put this into perspective, IoT Analytics estimated that there were 7 billion connected devices in 2017 and predicts that the number will rise to 22 billion by 2025. That will be almost 3 times the current world population.

Security Threats and Firmware Updates

With the growth of IoT devices, the security threats and attacks associated with them have also multiplied. Most IoT devices are deployed on the principle of set and forget. IoT vendors and manufacturers typically do not provide a secure and seamless firmware update mechanism for their connected devices. asvin provides a platform, powered by the Hyperledger Fabric distributed ledger, to distribute security patches and software updates for IoT devices.

Iterative Performance Tests

Since its first demonstration, the prototype has contributed hugely to our understanding of the asvin platform and has given us valuable insights into the technologies used and how they interact. It offered an opportunity to bring the idea of asvin to life. For over a year it has been among the top performers at tech meetups, security conferences, pitch events and tech demos, and asvin has expanded its horizons since then. asvin has received awards and garnered support from tech reviewers, from the Financial Innovation Business Conference (FIBC) 2019 in Tokyo, Japan to COHORT 5 in Dubai, United Arab Emirates. We have been able to leave our footprint in major cities of Asia and Europe.

It is paramount to stress test any IoT platform for performance, and asvin is no exception. The performance of the platform has been a critical aspect for asvin since its inception. With the prototyping phase complete, the next logical step was to stress test the platform for performance, scalability and resilience. Prototyping gave us confidence that what we once drew on a whiteboard works well today, but one question remained: is asvin’s platform capable of operating efficiently amid an exponentially growing number of IoT devices? To validate the platform and clear up any doubts, we ran iterative experiments on asvin using Fed4FIRE+ testbeds. The experiments were conducted over a period of 3 months to evaluate and improve the performance of asvin’s platform.

Test Setup

We used multiple tools and technologies to run the experiments on Virtual Wall, a Fed4FIRE+ testbed. The following sections detail the test setup.

jFed

jFed is an application that provides a user-friendly interface for interacting with remote testbeds hosted by Fed4FIRE+. It can efficiently provision and manage the resources available on multiple testbeds.

The requested resources can be configured using a Resource Specification (RSpec). These configurations fall mainly into 3 categories.

- Disk images

  - Operating systems, e.g. Windows, Ubuntu, Debian etc.

- Network

  - Public Internet access, network impairment (latency, delay, bandwidth)

- Storage

  - Permanent storage, increase root partition, extra disk space etc.

An Experiment Specification (ESpec) is an extension of the RSpec format. It can be used to bootstrap an experiment, for example by automatically running commands or transferring files to and from nodes.

ESpec Generator

The ESpec file format is used to automate Kubernetes cluster installation on testbed nodes. An ESpec file can contain instructions such as uploading and running a script file on the master node and worker nodes, or installing dependencies with sudo access. We used a program written in Python to generate the ESpec file for a desired cluster configuration. It has multiple options for setting cluster properties, for example the number of worker nodes and the target Virtual Wall testbed. Its output is a tar file, which contains the ESpec file, scripts and other configuration files needed to set up a Kubernetes cluster. From here on, the jFed experimenter tool can be used to load the tar file and provision the resources required to build the Kubernetes cluster.
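To make the generator idea concrete, here is a minimal sketch of how such a program might pack a cluster description and node scripts into a tar archive. It is not asvin's actual generator: the function name `build_bundle`, the file names, the manifest layout and the example testbed value are all hypothetical, and the manifest is plain JSON rather than a real ESpec file, whose schema is defined by jFed.

```python
import io
import json
import tarfile


def build_bundle(worker_count: int, testbed: str) -> bytes:
    """Pack a cluster description and node scripts into a gzipped tar archive."""
    # Hypothetical manifest layout, NOT the real ESpec schema.
    manifest = {
        "testbed": testbed,
        "nodes": ["master"] + [f"worker{i}" for i in range(1, worker_count + 1)],
        "scripts": {"master": "master.sh", "worker": "worker.sh"},
    }
    # Placeholder scripts; a real bundle would install and join the cluster here.
    files = {
        "cluster-manifest.json": json.dumps(manifest, indent=2),
        "master.sh": "#!/bin/sh\n# placeholder: set up the master node\n",
        "worker.sh": "#!/bin/sh\n# placeholder: set up a worker node\n",
    }
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w:gz") as archive:
        for name, text in files.items():
            data = text.encode("utf-8")
            info = tarfile.TarInfo(name=name)
            info.size = len(data)  # the size must be set before adding the member
            archive.addfile(info, io.BytesIO(data))
    return buffer.getvalue()


# Example: a cluster with 3 worker nodes on an example testbed name.
bundle = build_bundle(3, "wall1")
with open("cluster-bundle.tar.gz", "wb") as handle:
    handle.write(bundle)
```

Building the archive in memory keeps the function easy to test; the caller decides where the resulting bytes are written.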

Kubernetes Cluster

We needed an efficient, production-grade container orchestration system to deploy, scale and manage containerized applications. Kubernetes was the best fit. A Kubernetes cluster is a collection of two types of nodes:

- Master node

- Worker node

A worker node can be a virtual or physical machine. Each worker node runs multiple services, for instance kubelet and kube-proxy, which are essential for running containerized applications (pods). A high-level view of the architecture is illustrated in the following diagram.

A Kubernetes master is a controller node. It manages all the worker nodes in a cluster. The master node uses the kubelet service running on each worker node to manage pods. End users are provided with a command line interface named kubectl to manage the Kubernetes cluster. A Kubernetes user always communicates with the master node, which executes commands and tasks on the worker nodes in the background.

Control Server

At this stage, we have a Kubernetes cluster running on the desired testbed. As mentioned earlier, the kubectl tool can be used to schedule a task on worker nodes, but this does not scale to a large experiment. On top of that, a Kubernetes cluster pulls its images from a Docker registry, and testing asvin.io requires a custom Docker image. To solve both problems, we used a control server with a web interface.

It performs three important tasks.

1. Build Docker Images

The server hosts a private Docker registry where users’ custom Docker images are stored. Docker is installed on the server and the Docker Registry 2.0 image is run. Port 5000 of the container is exposed so that a user can build and store a Docker image, and all Kubernetes worker nodes can pull custom images from the private registry. A user can use the web interface of the control server to upload, build and store a custom image. Images are uploaded as gzipped tarball files.

2. Control Experiment

To schedule a task on the Kubernetes cluster, a user starts an experiment on the control server. An experiment comprises the following properties.

- A name tag of a docker image

- Parameters to pass to the command running in a container

- Number of containers

- Number of threads in a container

These parameters are taken into account to create and run a Kubernetes job on the cluster.
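As an illustration, the four experiment properties could be mapped onto a Kubernetes `batch/v1` Job manifest roughly as follows. This is a sketch under assumptions, not the control server's actual code: the function `build_job`, the job name, the registry host `control-server`, the image name and the `--threads` and `--target` flags are hypothetical.

```python
import json


def build_job(name, image, args, containers, threads):
    """Return a batch/v1 Job manifest as a plain dict."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            # Run `containers` pods in total, all of them at the same time.
            "completions": containers,
            "parallelism": containers,
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Hypothetical flag: assumes the image reads its
                            # thread count from the command line.
                            "args": list(args) + ["--threads", str(threads)],
                        }
                    ],
                }
            },
        },
    }


job = build_job(
    name="asvin-load",
    image="control-server:5000/asvin-client:latest",  # hypothetical image in the private registry
    args=["--target", "https://example.invalid"],      # hypothetical parameter
    containers=1000,
    threads=10,
)
print(json.dumps(job, indent=2))
```

The printed JSON can be saved to a file and submitted with `kubectl apply -f`, since kubectl accepts JSON manifests as well as YAML.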

3. Monitor Experiment

An experiment without findings is of little use. It is important to rate an experiment against performance metrics, and these metrics are decided before the experiment starts. Gathering data while the experiment runs is essential for making effective decisions. For the asvin.io experiment, we used InfluxDB and Grafana to monitor the runs and derive insights. Both programs were installed on the control server using the ESpec file. The output of the experiment was time series data. All pods running on the Kubernetes cluster stored their results in the InfluxDB database. Grafana was used to query the database and visualize the results as graphs.

Results

The Fed4FIRE+ experiments were very productive for asvin.io. At the start, we set goals and objectives to achieve within a specific timeline. We were able to stick to those deadlines and reach the milestones. The most important metrics for assessing the outcome were the number of updates per second and the time taken by a running container to ping the asvin servers, verify details on the blockchain server and download firmware from the IPFS server. The following subsections shed more light on the results.

Scalability and Resilience

Scalability and resilience are top priorities for our solution. We have pursued them with a compact and efficient design. We wanted to put asvin.io through the furnace to test these properties. We started the experiment by deploying 1 Docker container on a Kubernetes cluster of 100 nodes. Each container ran 10 parallel threads. The aim was to scale up to 1000 Docker containers, which would model 10,000 to 100,000 physical IoT devices. We achieved this goal, and the asvin.io platform performed exceptionally well under stress conditions. We monitored the experiment by collecting data on the number of requests originating from each container on the Kubernetes cluster.

In the above graph, different colors represent distinct Docker containers. It shows the number of requests made per second per container to the asvin servers. The next graph illustrates the total number of requests handled by asvin.io.

Each thread in a container constantly makes curl requests to the asvin.io servers: it checks the version server for a new firmware version, verifies the details with the blockchain server and downloads the new firmware from the IPFS server. We tracked the number of firmware updates performed by the asvin.io servers, which gives a clear picture of the scalability and robustness of the architecture.
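The per-container behaviour described above can be sketched with a thread pool. This is an illustration only: the experiment used curl against the real servers and polled continuously, whereas the sketch runs a fixed number of polls per thread and takes a stand-in function, `check_for_update`, in place of the network calls. All names are hypothetical.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def run_container(check_for_update, threads=10, polls_per_thread=5):
    """Run `threads` workers that each poll `polls_per_thread` times.

    Returns the number of polls that reported an update.
    """
    updates = 0
    lock = threading.Lock()

    def worker(thread_id):
        nonlocal updates
        for _ in range(polls_per_thread):
            if check_for_update(thread_id):
                with lock:  # protect the shared counter
                    updates += 1

    with ThreadPoolExecutor(max_workers=threads) as pool:
        # list() forces evaluation so any worker exception is re-raised here.
        list(pool.map(worker, range(threads)))
    return updates


def fake_check(thread_id):
    """Stand-in for the real requests: even-numbered threads always find an update."""
    return thread_id % 2 == 0


print(run_container(fake_check))  # 5 even-numbered threads x 5 polls = 25
```

Passing the check as a function keeps the threading logic separate from the HTTP client, so the same loop could be driven by real requests or by a stub.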

The following graph displays the total number of firmware updates performed on the testbeds. It demonstrates that the asvin servers can handle a large number of requests from IoT devices while updating their firmware at the same time.

Performance Optimization

We performed the Fed4FIRE+ experiments iteratively. In every iteration, some of the server parameters were fine-tuned to improve the performance of the platform, and we measured the response time. In the experiment, response time is defined as the time taken to send a request to the server, verify the details and download the firmware. An IoT device polls the Version Controller Server for a new firmware version and then sends a request with the version number of the new firmware to the blockchain server. The server verifies the details and sends the firmware details to the device, which uses them to download the firmware from the IPFS server. In the beginning, the average response time was almost 3s per request, as the following diagram illustrates.

Over the iterations, the response time was brought down to 1.8s, as shown in the following diagram.
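The response-time definition and the reported improvement can be expressed in a few lines. This is a sketch with stub functions standing in for the version, blockchain and IPFS servers; the function names are hypothetical, and 3.0s is used as a round figure for the "almost 3s" starting point.

```python
import time


def timed_update(poll_version, verify_on_blockchain, download_firmware):
    """Run the three steps of one update and return the elapsed seconds."""
    start = time.perf_counter()
    version = poll_version()                 # ask the version server
    details = verify_on_blockchain(version)  # verify and fetch firmware details
    download_firmware(details)               # fetch the firmware itself
    return time.perf_counter() - start


def relative_improvement(before: float, after: float) -> float:
    """Fraction by which the response time dropped."""
    return (before - after) / before


# Stubs in place of real network calls.
elapsed = timed_update(lambda: "1.0.1", lambda v: {"version": v}, lambda d: b"")
print(f"one stubbed update took {elapsed:.6f}s")

# Roughly 3s at the start versus 1.8s after tuning.
print(f"{relative_improvement(3.0, 1.8):.0%}")  # 40%
```

In other words, going from about 3s to 1.8s cuts the response time by roughly 40 percent.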

Conclusion

The experiment gave us substantial insights into the asvin platform. asvin is an amalgamation of cutting-edge technologies such as blockchain and IPFS, and the platform is capable of handling a large number of IoT devices. Following the success of the experiment, asvin was selected by Fed4FIRE+ to demonstrate the platform at the fifth Fed4FIRE+ Engineering Conference (FEC) in Copenhagen, Denmark. asvin now has a seal of confidence and validity from a project backed by the European Union.