As promised, I am back with another installment in the asvin blog series on the Fed4FIRE+ Stage 2 Experiment. If you have been following the series, you already understand asvin’s objectives, our set-up, and the initial results described in the Fed4FIRE+ Stage 2 Experiment and Blockchain posts. In this edition, I cover the second pillar of the asvin platform: the IPFS server and how it performed on Fed4FIRE+.

InterPlanetary File System

asvin uses the InterPlanetary File System (IPFS) protocol to store firmware and security patches. IPFS is a content-addressable, peer-to-peer protocol for storing and sharing hypermedia in a distributed file system. Because a file’s address is derived from its content, stored firmware cannot be altered without changing its address, and identical content is stored only once, which avoids redundant copies across the network.

The complexities of the IPFS server are abstracted by the intuitive Customer Platform, which allows customers to manage security patches, devices, and rollouts. When a customer uploads a firmware file or security patch on the Customer Platform, the platform internally calls an API endpoint to store the file on the IPFS server. The IPFS server generates a hash of the firmware content, called a Content Identifier (CID), and the CID is then stored on an immutable distributed ledger. Later, edge devices use the CID to retrieve the corresponding firmware file from the IPFS server. Internally, IPFS uses a Merkle DAG as its data structure. This blog explains what happens ‘under the hood’ when files are added to IPFS.
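To make the idea of content addressing concrete, here is a minimal sketch in Python. It is not how IPFS is implemented: a real CID is a multihash-encoded identifier computed over a chunked Merkle DAG, whereas this toy store simply uses a SHA-256 hex digest as a stand-in. The `ContentStore` class and its method names are hypothetical and exist only for illustration.

```python
import hashlib


class ContentStore:
    """Toy content-addressed store: the key is derived from the bytes themselves."""

    def __init__(self):
        self._blocks = {}

    def add(self, data: bytes) -> str:
        # Simplified stand-in for a CID: a plain SHA-256 hex digest (assumption).
        content_id = hashlib.sha256(data).hexdigest()
        # Identical content maps to the same key, so it is stored only once.
        self._blocks.setdefault(content_id, data)
        return content_id

    def get(self, content_id: str) -> bytes:
        data = self._blocks[content_id]
        # Re-hash on read: altered content would no longer match its identifier.
        if hashlib.sha256(data).hexdigest() != content_id:
            raise ValueError("stored block does not match its identifier")
        return data

    def __len__(self) -> int:
        return len(self._blocks)


store = ContentStore()
cid_a = store.add(b"firmware v1.0")
cid_b = store.add(b"firmware v1.0")  # same bytes, same identifier
cid_c = store.add(b"firmware v1.1")  # different bytes, different identifier
```

Uploading the same firmware twice yields the same identifier and a single stored copy, which is the deduplication property described above.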

Once customers create and schedule a rollout on their Customer Platform, edge devices continuously poll the Version Controller for the new rollout. On the predetermined rollout date, edge devices retrieve the firmware ID from the Version Controller and then download the critical firmware metadata from the Blockchain Server. The metadata includes the CID and the firmware message digest (MD), among other fields. The CID is then used to download the firmware file from IPFS.
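The device-side flow can be sketched roughly as follows. This is an illustration only: the three services are replaced by in-memory dictionaries, the identifiers (`device-42`, `fw-1.1`, `example-cid-1`) are made up, and SHA-256 is assumed for the message digest because the post does not name the algorithm. Checking the download against the MD is also our assumption about how a device would use that field.

```python
import hashlib
from typing import Optional

FIRMWARE = b"\x7fFIRMWARE-1.1"
CID = "example-cid-1"  # placeholder string, not a real IPFS CID

# Hypothetical in-memory stand-ins for the three services.
version_controller = {"device-42": "fw-1.1"}  # device id -> scheduled firmware id
blockchain = {"fw-1.1": {"cid": CID, "md": hashlib.sha256(FIRMWARE).hexdigest()}}
ipfs = {CID: FIRMWARE}  # CID -> firmware bytes


def fetch_update(device_id: str) -> Optional[bytes]:
    """Return the scheduled firmware image, or None if no rollout is due."""
    # 1. Ask the Version Controller whether a rollout is scheduled.
    firmware_id = version_controller.get(device_id)
    if firmware_id is None:
        return None
    # 2. Fetch the firmware metadata (CID and digest) from the ledger.
    meta = blockchain[firmware_id]
    # 3. Download the firmware by CID.
    image = ipfs[meta["cid"]]
    # 4. Check the download against the recorded digest before using it.
    if hashlib.sha256(image).hexdigest() != meta["md"]:
        raise ValueError("firmware digest mismatch")
    return image
```

A device with a scheduled rollout receives the firmware bytes, a device without one receives `None`, and a tampered image is rejected at step 4.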

In our experiment, asvin generated an onslaught of firmware upload and download API traffic to test whether the IPFS server could handle the congestion without a noticeable increase in latency.

Experiment results

The IPFS server performed well under high traffic. asvin assessed server robustness using the total number of requests (successful and failed), the response time of each request, and server usage. The outcomes are documented below.

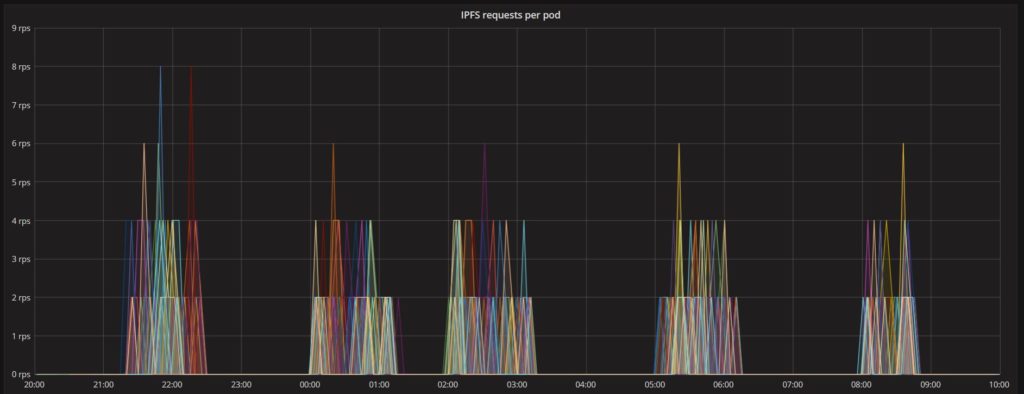

We’ve used different colours in the graph to represent distinct Kubernetes pods. Each pod ran several threads, each representing a virtual IoT edge device that periodically polled for updates scheduled in the asvin platform. Each IoT edge device securely downloads the new firmware from the IPFS server using the corresponding CID fetched from the Blockchain server. The graph below shows the number of requests made per second, per pod, to the IPFS server. The IPFS server handled the surge of requests from thousands of devices without faults.

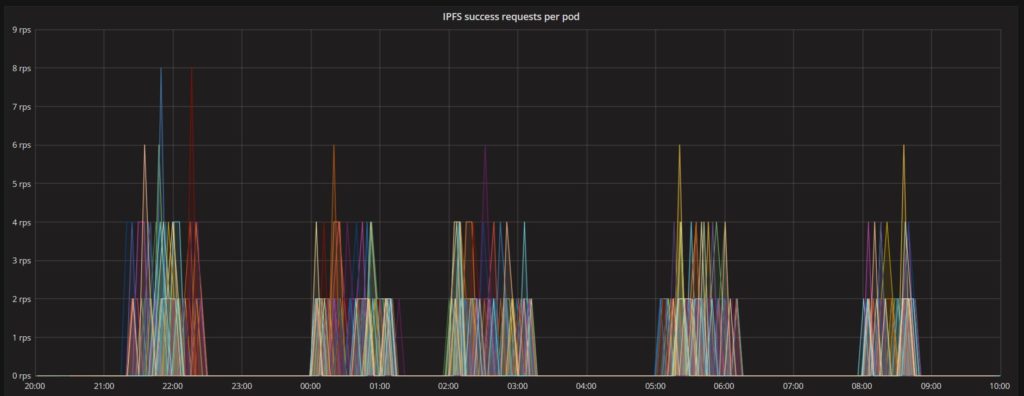
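The thread-per-device approach described above can be illustrated with a small sketch. It is not the experiment code: `fake_download` is a hypothetical stand-in for the real HTTP request to the IPFS server, and the device and poll counts are arbitrary.

```python
import threading
import time


def fake_download(cid: str) -> bytes:
    # Hypothetical stand-in for the HTTP request to the IPFS server.
    return b"firmware:" + cid.encode()


class Stats:
    """Thread-safe counters shared by all virtual devices in one pod."""

    def __init__(self):
        self._lock = threading.Lock()
        self.success = 0
        self.failed = 0
        self.latencies = []  # seconds per request

    def record(self, ok: bool, seconds: float) -> None:
        with self._lock:
            if ok:
                self.success += 1
            else:
                self.failed += 1
            self.latencies.append(seconds)


def virtual_device(cid: str, polls: int, stats: Stats) -> None:
    # One thread plays the role of one IoT edge device.
    for _ in range(polls):
        start = time.perf_counter()
        try:
            fake_download(cid)
            ok = True
        except Exception:
            ok = False
        stats.record(ok, time.perf_counter() - start)


def run_pod(devices: int, polls: int, cid: str = "example-cid") -> Stats:
    stats = Stats()
    threads = [
        threading.Thread(target=virtual_device, args=(cid, polls, stats))
        for _ in range(devices)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return stats


stats = run_pod(devices=20, polls=5)
```

Each call to `run_pod` plays the role of one pod; the success and failure counters and the per-request timings correspond to the kinds of metrics shown in the graphs.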

The next graph illustrates the total number of successful requests handled by the IPFS server. There were no failed requests.

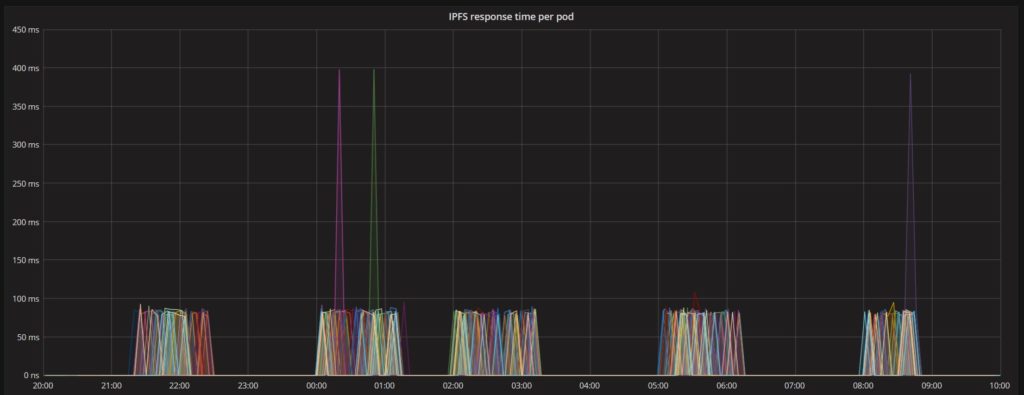

As part of the performance evaluation, we calculated the response time of each request sent by edge devices to the IPFS server. The server successfully scaled in response to the high volume of traffic issued by many IoT devices and demonstrated remarkable resiliency. The total response time for each request is recorded by the device application program and stored in InfluxDB for performance evaluation. Further analysis of the asvin platform and this experiment, written in Python, can be found in our GitHub repository.

Request response times improved notably in Stage 2 compared with the Stage 1 experiment. The figure below shows the IPFS response times. Even though many devices were requesting firmware downloads simultaneously, the response times show that the server can handle a large number of requests efficiently, with most handled within 90 milliseconds.

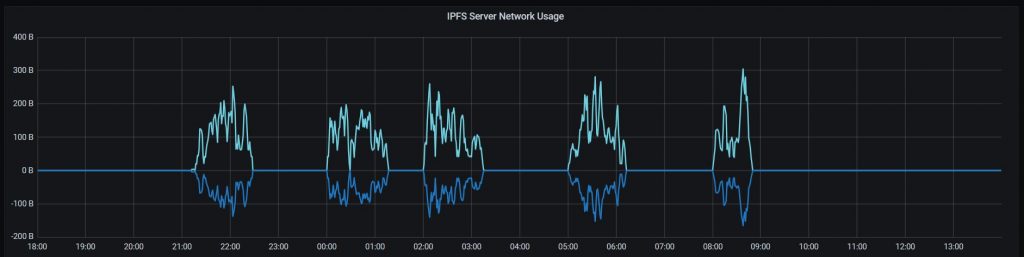
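A statement such as “most requests were handled within 90 milliseconds” can be checked with a short summary over the recorded response times. The sketch below assumes the timings are already available as a list of milliseconds; the `summarize` helper is hypothetical, and the sample values are made up for illustration and are not measurements from the experiment.

```python
import statistics


def summarize(latencies_ms, threshold_ms=90.0):
    """Summarize response times and the share at or below a threshold."""
    within = sum(1 for v in latencies_ms if v <= threshold_ms)
    return {
        "count": len(latencies_ms),
        "median_ms": statistics.median(latencies_ms),
        "share_within": within / len(latencies_ms),
    }


# Made-up sample values for illustration only.
sample = [40.0, 55.0, 62.0, 71.0, 88.0, 90.0, 120.0, 45.0]
summary = summarize(sample)
```

In this sample, seven of the eight values fall at or below the threshold, so `share_within` is 0.875.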

We also ran the experiment with limited bandwidth and increased server-side latency to examine the efficiency of the IPFS server. The next graph shows the changing network bandwidth usage with the corresponding response times. For clarity, the bandwidth used by the IPFS server while serving new firmware download requests is plotted as positive for incoming traffic and negative for outgoing traffic. Horizontal pod scaling in the asvin platform provides seamless adaptation to high traffic and load balancing.

Conclusion

asvin gathered an enormous amount of data from the Fed4FIRE+ experiment. Our analysis has allowed us to improve the scalability and resilience of the asvin platform in combination with IPFS servers. The platform’s horizontal pod scaling makes it easy to accommodate high traffic and balance the load. The Stage 2 experiment results show that, despite changes in network bandwidth and latency to the IPFS server, the distributed and decentralised nature of the asvin platform and IPFS servers kept firmware distribution from IPFS smooth and seamless.