AI makes “assume breach” the new reality

Malicious AI code evolves too fast for patches to protect critical infrastructure

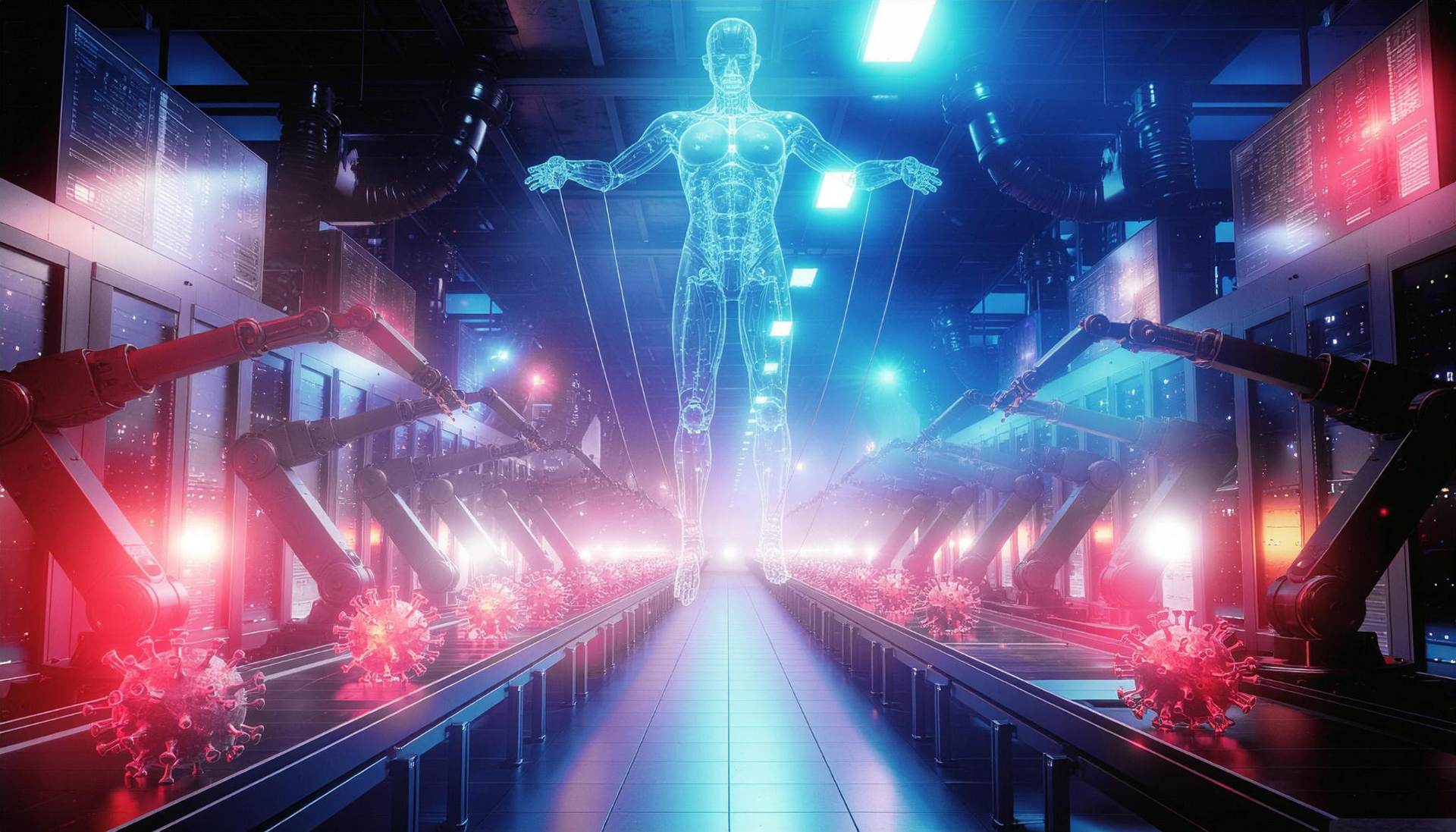

AI is making “assume breach” the new reality.

When AI generates malicious code in a fraction of a second, patches and updates are no longer sufficient to effectively protect our critical infrastructure.


In its latest report, “THE STATE OF IT SECURITY IN GERMANY 2025,” the German Federal Office for Information Security (BSI), Germany’s top cybersecurity authority, concludes:

“The worse an attack surface is protected, the more likely a successful attack becomes. In contrast, consistent attack surface management – such as restrictive access management, timely updates, or minimizing publicly accessible systems – directly reduces the risk of successful attacks.”

In view of the unchecked spread of AI, this statement falls far short of the mark. Attackers are either already inside or in the process of bypassing even the best defenses (“assume breach”). “Timely updates” will lose much of their value as a protective measure in the coming weeks and months: AI-driven automation shrinks the window between the discovery of a vulnerability and its exploitation to a few minutes or even seconds. This does not mean that we should abandon maintaining system security through patches and updates, but we should no longer be under any illusion that such practices alone will secure a system.
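The shrinking window described above can be made concrete with a little arithmetic. The following is a minimal sketch, not a measurement: the function name `exposure_window` and all durations are hypothetical values chosen purely for illustration.

```python
from datetime import timedelta


def exposure_window(time_to_exploit: timedelta, time_to_patch: timedelta) -> timedelta:
    """Time during which a working exploit exists but the patch is not yet deployed."""
    return max(time_to_patch - time_to_exploit, timedelta(0))


# Hypothetical figures, for illustration only.
time_to_patch = timedelta(days=7)         # assumed time to roll out an update
manual_exploit = timedelta(days=30)       # assumed manual exploit development
automated_exploit = timedelta(minutes=5)  # assumed AI-automated exploit generation

print(exposure_window(manual_exploit, time_to_patch))     # 0:00:00
print(exposure_window(automated_exploit, time_to_patch))  # 6 days, 23:55:00
```

Under these assumed numbers, a patch that once arrived well before any exploit now leaves the system exposed for almost the entire rollout period.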

Why AI forces us to assume that systems are already compromised

The landscape of cybersecurity is changing at an alarming speed due to AI.

In the era before LLMs, writing malicious code for vulnerabilities, testing it, and deploying it was a time-consuming, mostly manual task performed by highly specialized red teams. Today, powerful AI models can automate and scale a large part of the manual work involved in pentesting. In particular, the AI-based generation of malicious code – from polymorphic malware to the automated discovery of vulnerabilities – exacerbates a fundamental problem: the window of opportunity for closing vulnerabilities through updates and patches is becoming ever narrower.

Polymorphic malware on the assembly line thanks to generative AI:

AI models, especially large language models (LLMs), can not only generate code, but also cause it to mutate constantly.

Polymorphic malware continuously changes its signature and appearance without losing its core function. This makes it invisible to classic, signature-based security tools: a known pattern that was detected yesterday is a new, unknown variant today. An LLM can mutate malicious code at almost arbitrary speed and generate new variants with new signatures. As a result, the signature databases of antivirus software and intrusion detection systems are increasingly flooded with new malicious code variants. By the time updated signatures have been built and delivered to the defense systems, they are already outdated.
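Why a signature breaks so easily can be shown with entirely harmless code. The sketch below assumes a deliberately simplified "signature", namely the SHA-256 hash of the source text; real scanners use more elaborate signatures, and the helper names `signature` and `run` are hypothetical. Two snippets that compute the same result produce completely different hashes.

```python
import hashlib


def signature(code: str) -> str:
    """SHA-256 of the source text, standing in for a static signature."""
    return hashlib.sha256(code.encode("utf-8")).hexdigest()


def run(code: str) -> int:
    """Execute the snippet in a fresh namespace and return its `result` variable."""
    namespace = {}
    exec(code, namespace)
    return namespace["result"]


# Two harmless snippets with identical behavior but different text.
original = "total = 0\nfor n in range(5):\n    total += n\nresult = total\n"
variant = "acc = 0  # renamed\nfor i in range(5):\n    acc = acc + i\nresult = acc\n"

known_signatures = {signature(original)}

print(run(original) == run(variant))           # True: same behavior
print(signature(variant) in known_signatures)  # False: signature no longer matches
```

A renamed variable and a comment are enough to defeat the lookup, which is why purely signature-based detection cannot keep up with automatically generated variants.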

Automated hunt for zero-day vulnerabilities:

LLMs in particular can analyze huge amounts of code and find vulnerabilities based on learned error patterns. As Anthropic’s models have already demonstrated, AI can automate this process and search specifically for zero-day vulnerabilities. In doing so, it uncovers security vulnerabilities that are still unknown to the developers themselves and for which there is therefore no patch yet. In the near future, such AI-automated attacks are likely to lead to an “automated zero-day attack factory.”
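The idea of searching code for known error patterns can be illustrated with a far simpler stand-in. The following sketch uses a handful of hand-written regular expressions instead of a trained model; the rule set `RISKY_PATTERNS` and the function `scan` are hypothetical and only flag a few classically unsafe C library calls.

```python
import re

# Hand-written, hypothetical rules; a trained model recognizes far richer patterns.
RISKY_PATTERNS = {
    "unbounded copy": re.compile(r"\bstrcpy\s*\("),
    "unbounded read": re.compile(r"\bgets\s*\("),
    "unbounded format": re.compile(r"\bsprintf\s*\("),
}


def scan(source: str) -> list[tuple[int, str]]:
    """Return (line number, finding) pairs for lines matching a risky pattern."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for label, pattern in RISKY_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, label))
    return findings


sample = """\
void greet(const char *name) {
    char buf[16];
    strcpy(buf, name);
    printf("hello %s\\n", buf);
}
"""

print(scan(sample))  # [(3, 'unbounded copy')]
```

Even this crude scanner processes code as fast as it can be read. An LLM applies the same principle with learned rather than hand-written patterns, which is what makes the search scale.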

The AI dilemma of zero-day exploits:

By definition, a zero-day exploit is an attack that takes place before a patch or update is available.

This means that even a fully patched system has no protection against such an attack.

The new security premise must be “assume breach.”

Given this reality, the “patch and update” paradigm of cybersecurity must change. We can no longer assume that our systems are secure just because all patches have been installed.

Instead, we must internalize the “assume breach” mindset: always assume that your systems have already been compromised.