As part of the asvin blog series on the Fed4FIRE+ Stage 2 Experiment, this post presents the experiment results for the enhanced third pillar of the asvin BeeHive architecture, the Version Controller. It extends the earlier result posts Fed4FIRE+ Stage 2 Experiment – Blockchain and Fed4FIRE+ Stage 2 Experiment – IPFS.

Version Controller

To manage billions of IoT edge devices, asvin BeeHive IoT update distribution employs a distributed service cluster infrastructure. This complexity is invisible to edge devices, which interact with the cluster as they would with a single machine. The Version Controller consists of multiple instances. Each instance in the cluster is a fully functional server and can serve a request independently. Each node has a different IP address, but these addresses are hidden from the IoT edge devices. An abstraction layer on top of the cluster hides this complexity. It uses the round-robin DNS technique for load balancing and fault tolerance. The server accepts DNS requests and answers each one by directing it to a machine in the cluster, chosen in round-robin fashion.
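The round-robin selection described above can be illustrated with a minimal sketch. This is not asvin's implementation: the class name `RoundRobinResolver` and the private IP addresses are hypothetical, and a real round-robin DNS server typically rotates the order of the address records it returns rather than handing out a single address.

```python
from itertools import cycle


class RoundRobinResolver:
    """Hands out backend addresses in a fixed rotating order (illustrative only)."""

    def __init__(self, addresses):
        if not addresses:
            raise ValueError("at least one backend address is required")
        self._addresses = list(addresses)
        self._rotation = cycle(self._addresses)

    def resolve(self):
        """Return the next backend address in the rotation."""
        return next(self._rotation)


# Hypothetical addresses for three Version Controller instances.
resolver = RoundRobinResolver(["10.0.0.1", "10.0.0.2", "10.0.0.3"])
picks = [resolver.resolve() for _ in range(6)]
print(picks)
```

Six consecutive lookups visit each of the three instances twice, which is how the load ends up spread evenly across the cluster in this simplified model.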

The server performs the following tasks.

- Response to IoT Edge Devices

To register on the asvin BeeHive IoT update platform, edge devices send requests with the required data to the Version Controller. The IoT edge devices also poll the Version Controller to check for new updates. If an update is scheduled, the server responds with the details of the valid firmware and the rollout ID.

- Latest Firmware Version List

The Version Controller maintains real-time information on the firmware versions available on the data storage servers. It holds a list of the firmware available on the asvin BeeHive update distribution platform for all IoT edge devices and keeps this list up to date in collaboration with the Customer Platform.

Experiment results

The Fed4FIRE+ experiment results demonstrate the key features of the Version Controller: high availability, scalability, and efficiency. Parameters such as the total number of requests (successful and failed) and the response time of each request to the server are used to assess the platform. The experiment outcomes are documented below.

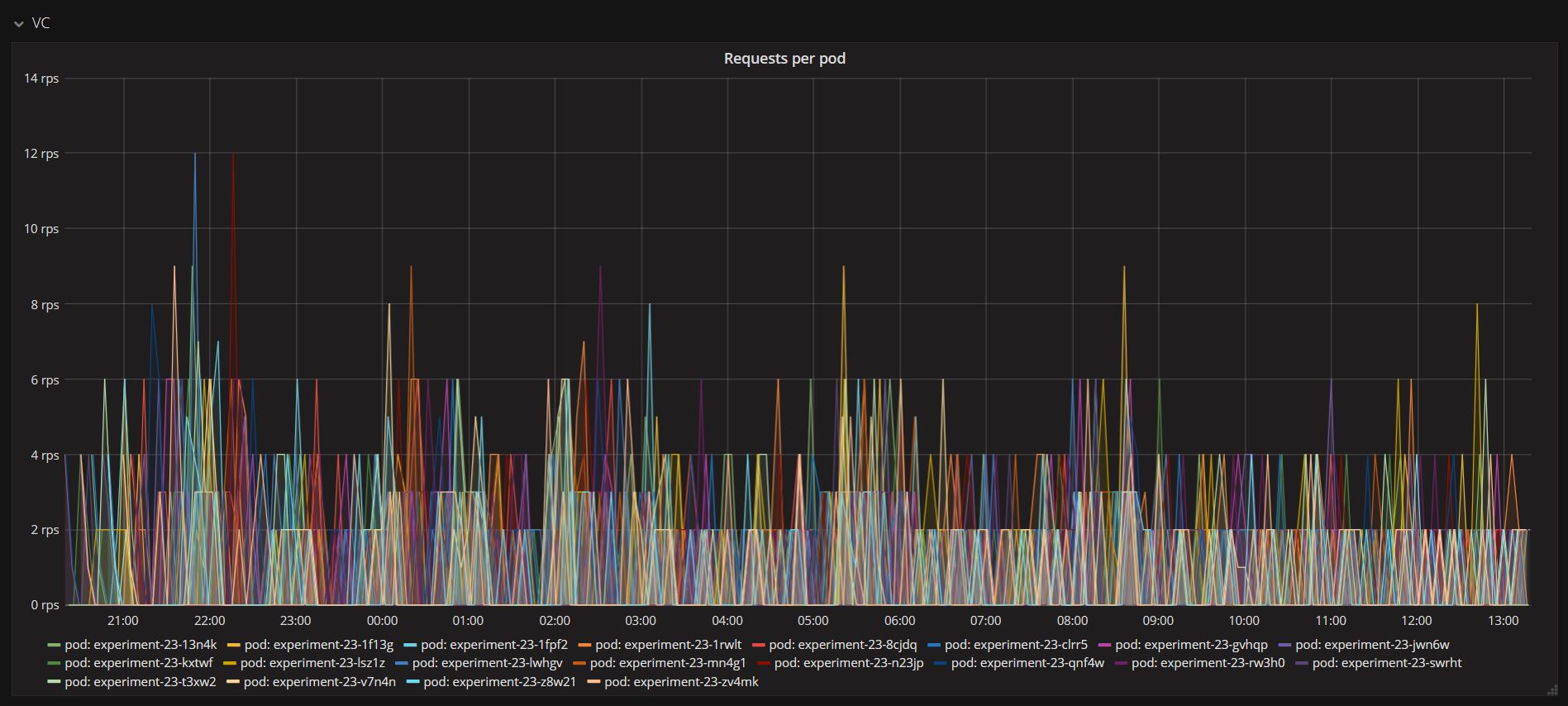

In the Stage 2 stress test, a Kubernetes architecture is employed to deploy thousands of virtual IoT edge devices. The Kubernetes pods are represented by different colors in the following graphs. Each pod ran a Python script that used multi-threading to create multiple virtual devices, with each thread representing one edge device. These virtual edge devices are programmed to periodically poll the Version Controller to fetch details of scheduled rollouts. In the response, the devices receive rollout and firmware IDs, which can be used for further operations involving firmware download and update, as explained in the previous Fed4FIRE+ Stage 2 Experiment – Blockchain and Fed4FIRE+ Stage 2 Experiment – IPFS blogs. After a successful firmware update using the asvin BeeHive OTA procedure, the devices send an update success status to the Version Controller, which updates the firmware details on the Customer Platform and Blockchain server.
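The thread-per-device approach can be sketched roughly as follows. This is a simplified illustration, not the script used in the experiment: `fake_check_rollout` is a hypothetical stand-in for the real Version Controller request, and the response fields `rollout_id` and `firmware_id` are assumed names chosen to mirror the IDs mentioned above.

```python
import threading
import time


def fake_check_rollout(device_id):
    """Hypothetical stand-in for the real Version Controller call."""
    return {"rollout_id": 1, "firmware_id": 7}


def run_device(device_id, polls, interval_s, results, lock,
               check_rollout=fake_check_rollout):
    """One thread acts as one virtual edge device and polls periodically."""
    for _ in range(polls):
        response = check_rollout(device_id)
        with lock:  # protect the shared result list across threads
            results.append(
                (device_id, response["rollout_id"], response["firmware_id"])
            )
        time.sleep(interval_s)


def run_pod(device_count, polls=3, interval_s=0.01):
    """Start one thread per virtual device and wait for all of them to finish."""
    results, lock = [], threading.Lock()
    threads = [
        threading.Thread(
            target=run_device,
            args=(f"device-{i}", polls, interval_s, results, lock),
        )
        for i in range(device_count)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


results = run_pod(device_count=5)
print(len(results))
```

In a real test the stub would be replaced by an HTTP request to the Version Controller, and each pod would run its own copy of the script with a much larger `device_count`.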

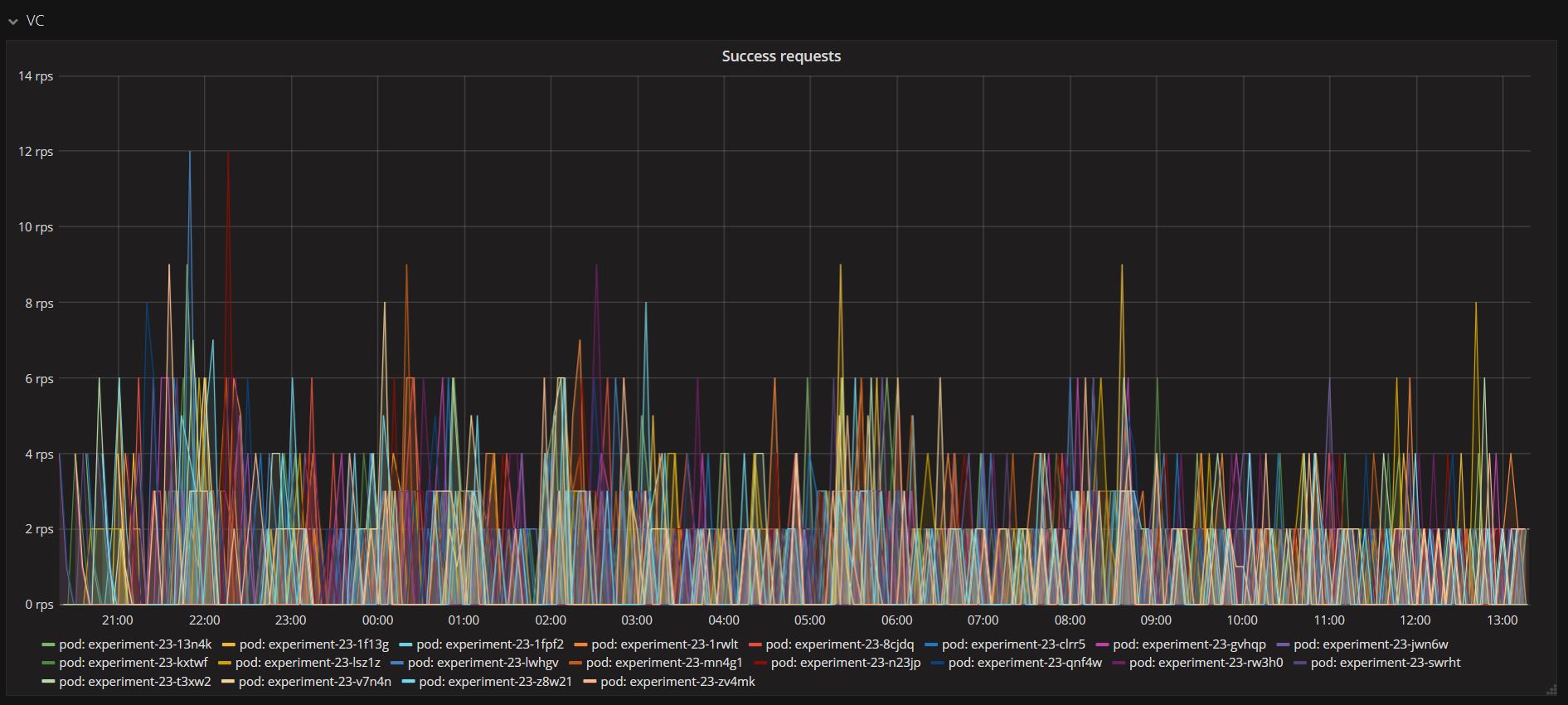

The graph above shows the number of requests per second that each pod made to the Version Controller. Each pod represents multiple virtual edge devices running as threads. The Version Controller handled all incoming requests without failure. The figure below displays the successful requests served by the Version Controller. This demonstrates the high availability of the server under heavy incoming traffic and shows that the cluster is highly scalable.

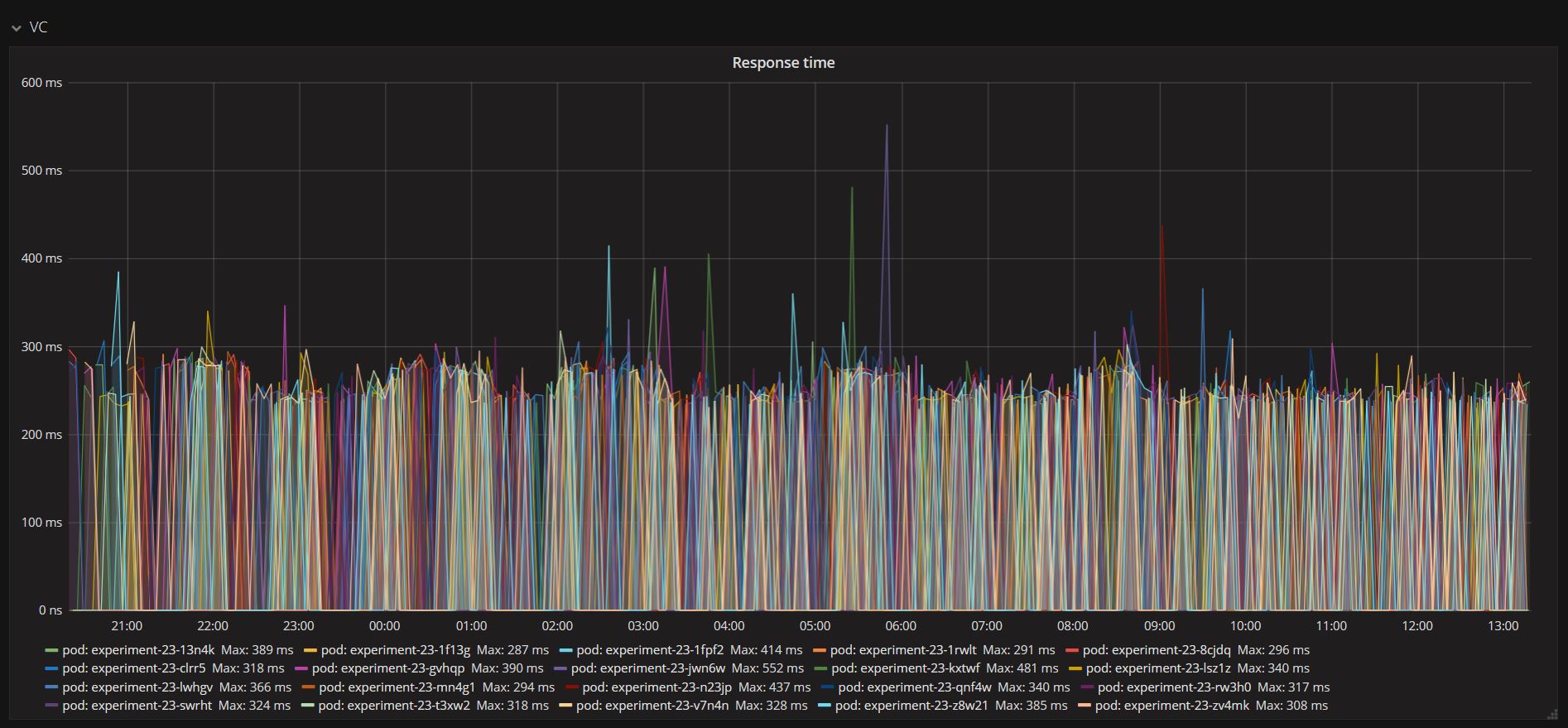

Response times improved notably in Stage 2 compared to the Stage 1 experiment. The figure below shows the Version Controller server response times. Even though many devices were requesting the next rollout details simultaneously, the response times show that the server can handle a large number of requests efficiently, with most handled within 250 milliseconds. This efficiency results from distributing the workload among multiple instances in the cluster, which boosts network performance, reduces collisions, and avoids congestion during high demand.

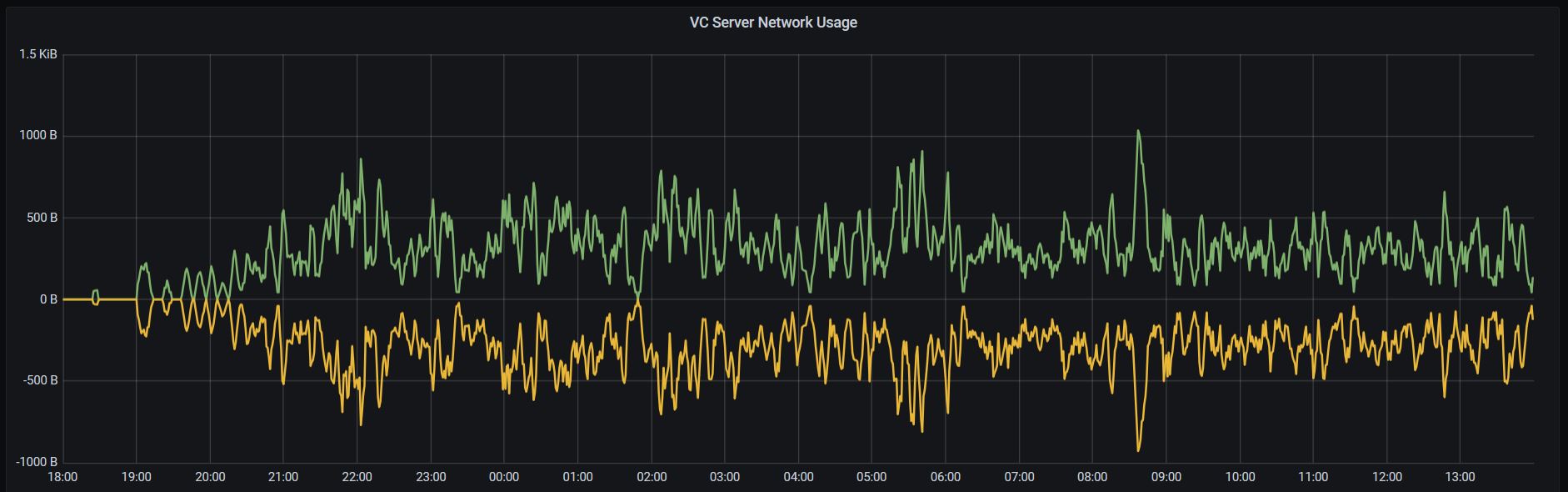
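A statement such as "most requests were handled within 250 milliseconds" can be checked against recorded latencies with a small helper. The sketch below is illustrative only: the function name `share_within` is hypothetical, and the sample values are made up for the example and are not measurements from the experiment.

```python
def share_within(latencies_ms, threshold_ms=250.0):
    """Return the fraction of latencies at or below the threshold."""
    if not latencies_ms:
        raise ValueError("no latencies recorded")
    within = sum(1 for value in latencies_ms if value <= threshold_ms)
    return within / len(latencies_ms)


# Made-up sample values in milliseconds, not experiment data.
sample_latencies_ms = [120.0, 180.0, 240.0, 310.0]
print(share_within(sample_latencies_ms))
```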

Furthermore, the Version Controller's performance was examined by limiting the bandwidth and increasing server-side latency. The next graph shows how network bandwidth usage changes with the corresponding requests and responses. For clarity, the effect of check rollout and update success requests on the bandwidth used by the Version Controller server is plotted with incoming traffic as positive values and outgoing traffic as negative values. Horizontal pod scaling in the asvin BeeHive update distribution platform provides seamless adaptation to high traffic and supports load balancing.

Conclusion

The outcomes of the Fed4FIRE+ Stage 2 experiment demonstrate the key features of asvin's Version Controller: high availability, scalability, and efficiency. The distributed nature of the Version Controller guards against a single point of failure. Even if an instance in the cluster goes down because of a hardware or software problem, the asvin framework stays functional. We have gathered an enormous amount of data from the Fed4FIRE+ experiment. Our analysis has allowed us to improve the resilience and performance of the asvin BeeHive patch distribution platform in combination with the Version Controller.
